The Challenge of Acknowledging Ignorance: AI and the Struggle to Admit "I Don't Know"

As artificial intelligence (AI) systems grow more capable, one intriguing challenge they face is admitting when they don't know something. AI has made remarkable strides in processing vast amounts of information, yet its reluctance to acknowledge ignorance poses a real dilemma. This article explores why the problem arises and why embracing the phrase "I don't know" matters in AI.

The Illusion of Omniscience

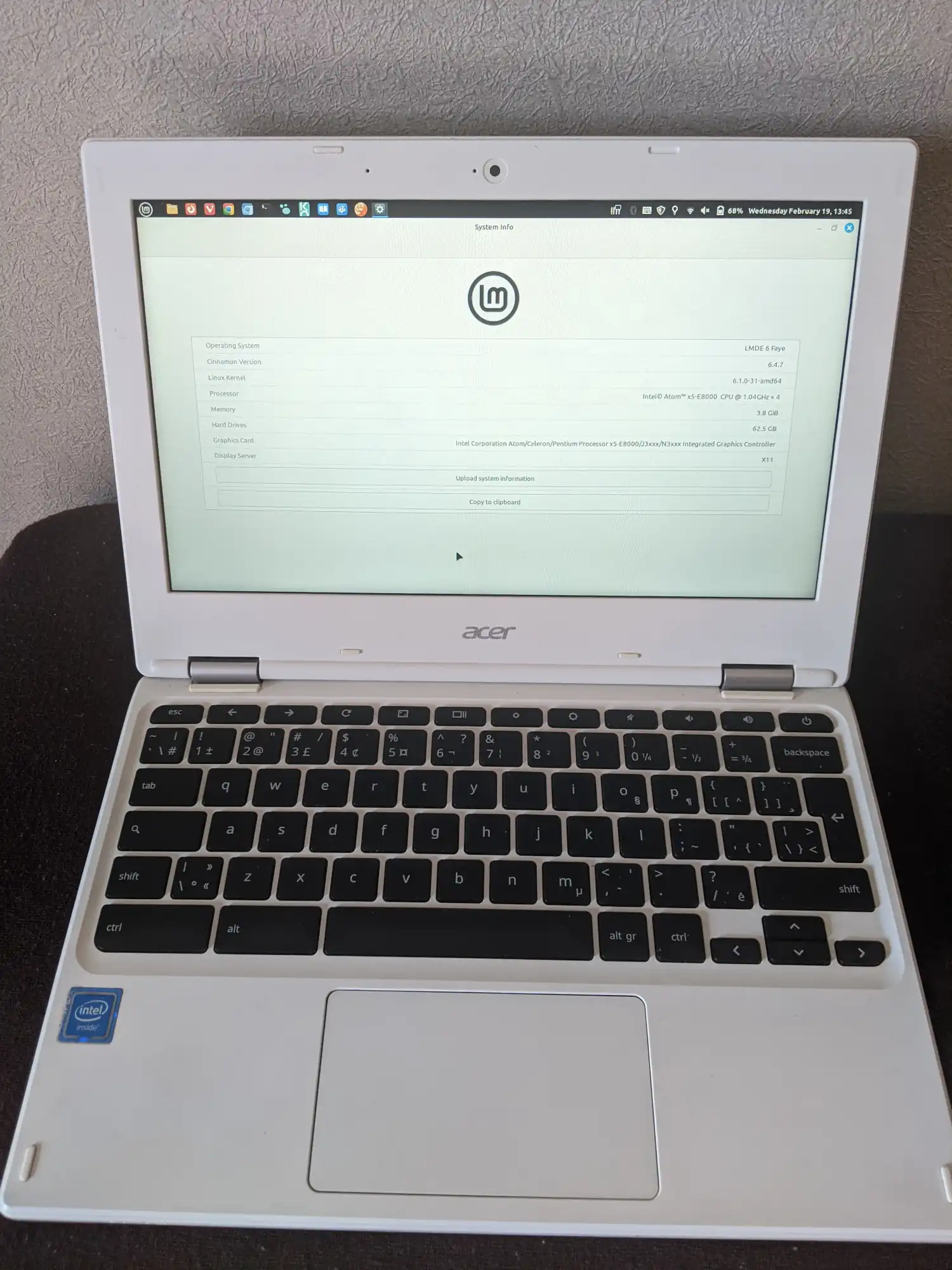

AI systems are designed to process data, learn patterns, and make predictions based on the information available to them. Their limitations surface when they face situations or queries for which they lack adequate data or understanding. A system built to always return an answer will produce one even without the relevant knowledge, which can lead to inaccurate or misleading results.
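
A common way to let a classifier say "I don't know" is selective prediction: the model answers only when its confidence clears a threshold and abstains otherwise. The sketch below assumes a classifier that outputs raw scores (logits) for a fixed set of labels; the function names, the labels, and the 0.7 threshold are hypothetical choices for illustration, not a specific system's method.

```python
import math


def softmax(logits):
    """Convert raw model scores (logits) into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def answer_or_abstain(logits, labels, threshold=0.7):
    """Return the top label, or "I don't know" when the model's probability
    for that label falls below `threshold` (a hypothetical cutoff)."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=lambda i: probs[i])
    if probs[best] < threshold:
        return "I don't know"
    return labels[best]


labels = ["cat", "dog", "fox"]
print(answer_or_abstain([4.0, 0.5, 0.2], labels))  # clear winner: prints cat
print(answer_or_abstain([1.0, 0.9, 0.8], labels))  # near tie: prints I don't know
```

Softmax probability is only a rough proxy for whether a model actually knows the answer, since models can be confidently wrong; the threshold trades coverage (how often the system answers) against the risk of a wrong answer.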

The Fear of Uncertainty

One of the primary reasons AI struggles to admit ignorance is a fear of uncertainty. Developers and engineers often design AI models to project confidence in their responses, because confident answers foster a sense of reliability. However, this aversion to uncertainty can keep the system from communicating its limitations effectively.

The Importance of Humility in AI

Just as in human interactions, humility is a virtue in AI. Acknowledging the limits of one's knowledge is a sign of intelligence and maturity. Developers increasingly recognize the value of building humility into AI systems so that they respond transparently when faced with unfamiliar scenarios.
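
Recognizing an unfamiliar scenario is often framed as out-of-distribution detection. One simple, illustrative approach, assuming inputs can be represented as numeric feature vectors, is to measure how far a new input lies from the nearest training example and treat anything beyond a cutoff as unfamiliar. The data points, helper names, and the 1.0 cutoff are hypothetical; real systems typically compute such distances on learned feature representations rather than raw inputs.

```python
import math


def nearest_distance(x, training_points):
    """Euclidean distance from x to the closest point seen in training."""
    return min(math.dist(x, p) for p in training_points)


def is_unfamiliar(x, training_points, max_distance=1.0):
    """Flag x as out-of-distribution if it lies farther than
    `max_distance` (a hypothetical cutoff) from every training point."""
    return nearest_distance(x, training_points) > max_distance


training = [(0.0, 0.0), (1.0, 0.5), (0.5, 1.0)]
print(is_unfamiliar((0.6, 0.7), training))  # False: close to known data
print(is_unfamiliar((5.0, 5.0), training))  # True: far from anything seen
```

An input flagged this way is a natural candidate for an "I don't know" response rather than a guess.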

Building a Foundation for Learning

Admitting ignorance is not a sign of weakness but a pathway to improvement. AI systems that embrace the phrase "I don't know" can actively seek additional information or learning opportunities to enhance their knowledge base. This continuous learning loop is crucial for the evolution and adaptability of AI technologies.
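
One established way to turn "I don't know" into a learning loop is active learning with uncertainty sampling: the system identifies the inputs it is least sure about and requests labels for those first, so new training data targets its gaps. A minimal sketch, assuming the model's class probabilities are already available for a pool of unlabeled queries; the query ids, the function name, and the labeling budget are hypothetical.

```python
def least_confident(predictions, budget):
    """Pick the `budget` inputs whose top-class probability is lowest.

    `predictions` maps an input id to the model's probability list for it.
    These are the cases where the model is closest to "I don't know",
    so they are the most informative ones to send for human labeling.
    """
    confidence = {item: max(probs) for item, probs in predictions.items()}
    ranked = sorted(confidence, key=confidence.get)  # lowest confidence first
    return ranked[:budget]


predictions = {
    "q1": [0.95, 0.03, 0.02],
    "q2": [0.40, 0.35, 0.25],
    "q3": [0.70, 0.20, 0.10],
    "q4": [0.34, 0.33, 0.33],
}
print(least_confident(predictions, budget=2))  # ['q4', 'q2']
```

In a full loop, the newly labeled examples would be added to the training set and the model retrained, after which the cycle repeats.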

Enhancing Human-AI Collaboration

Collaboration between humans and AI is most effective when each side understands the other's capabilities. When AI systems are designed to admit ignorance, users gain a more accurate picture of what the system can and cannot do, which leads to more informed decision-making.
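
Whether users can trust a system's stated confidence is something that can be measured. One standard metric is expected calibration error (ECE), which compares how confident a model claims to be with how often it is actually right: a well-calibrated system that says it is 80% sure should be correct about 80% of the time. The sketch below assumes each answer's confidence and its correctness have been logged; the five equal-width bins are a common but arbitrary choice.

```python
def expected_calibration_error(confidences, correct, n_bins=5):
    """Estimate how far stated confidence drifts from actual accuracy.

    confidences: the model's confidence in each answer it gave (0 to 1).
    correct: whether each of those answers was actually right.
    Predictions are grouped into equal-width confidence bins; within each
    bin the gap |accuracy - mean confidence| is weighted by the bin's size.
    """
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # Map confidence to a bin index; conf == 1.0 goes in the last bin.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))
    ece = 0.0
    for members in bins:
        if not members:
            continue
        accuracy = sum(ok for _, ok in members) / len(members)
        mean_conf = sum(conf for conf, _ in members) / len(members)
        ece += (len(members) / n) * abs(accuracy - mean_conf)
    return ece


# Overconfident: claims 90% but is right only half the time.
print(round(expected_calibration_error([0.9] * 4, [True, False, True, False]), 2))  # 0.4
# Well calibrated: claims 80% and is right 4 times out of 5.
print(round(expected_calibration_error([0.8] * 5, [True, True, True, True, False]), 2))  # 0.0
```

A low ECE means the system's confidence, including its willingness to say "I don't know", carries real information that users can rely on.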

Transparency in AI Communication

Developers are increasingly focusing on making AI systems more transparent. This means not only admitting when the system lacks information but also explaining the reasoning behind its responses. Transparent communication builds trust between users and AI and contributes to a more reliable, user-friendly experience.

Conclusion

In the quest to create intelligent and responsive AI systems, it is crucial to address the challenge of admitting ignorance. Embracing the phrase "I don't know" is not a setback but an opportunity for growth and improvement. By fostering humility, encouraging continuous learning, and enhancing transparency in communication, we pave the way for a more harmonious collaboration between humans and AI, where acknowledging limitations becomes a strength rather than a weakness.